This section presents a method for solving maximum-flow problem. The method depends on the three important ideas relevant to flow algorithms and problems: residual networks, augmenting paths, and cuts.

The Ford-Fulkerson method iteratively increases the value of the flow. We start with

for all

for all

, giving an initial value of 0. At each iteration, we increase the flow value in

, giving an initial value of 0. At each iteration, we increase the flow value in  by finding an “augmenting path” in an associated “residual network”

by finding an “augmenting path” in an associated “residual network”  . Once we know the edges of an augmenting path in

. Once we know the edges of an augmenting path in  , we can easily identify specific edges in

, we can easily identify specific edges in  for which we can change the flow so that we increase the value of the flow. Although each iteration of the F-F (Ford-Fulkerson) method increases the value of the flow, we shall see that the flow on any particular edge of

for which we can change the flow so that we increase the value of the flow. Although each iteration of the F-F (Ford-Fulkerson) method increases the value of the flow, we shall see that the flow on any particular edge of  may increase or decrease; decreasing the flow on some edges may be necessary in order to enable an algorithm to send more flow from the source to sink in other edge. We repeatedly augment the flow until the residual network has no more augmenting path. The max-flow min-cut theroem will show that upon termination, this process yields a maximum flow (see algorithms

may increase or decrease; decreasing the flow on some edges may be necessary in order to enable an algorithm to send more flow from the source to sink in other edge. We repeatedly augment the flow until the residual network has no more augmenting path. The max-flow min-cut theroem will show that upon termination, this process yields a maximum flow (see algorithms ![[*]](crossref.png) ).

).

![\begin{algorithm}

% latex2html id marker 198

[h]

\caption{Ford-Fulkerson algorit...

... \STATE update $f$

\ENDWHILE

\STATE return $f$

\end{algorithmic}\end{algorithm}](img71.svg)

We introduce following new concepts, in order to understand the above algorithm.

Residual networks. Given a flow  , the residual network

, the residual network  consists of edges with capacities that represent how we can change the flow on edge of

consists of edges with capacities that represent how we can change the flow on edge of  . An edge of the flow network can admit an amount of additional flow equal to the edge's capacity minus the flow on the edge. If that value is positive (

. An edge of the flow network can admit an amount of additional flow equal to the edge's capacity minus the flow on the edge. If that value is positive ( ), we place that edge into

), we place that edge into  with a “residual capacity”

with a “residual capacity”

. The only edges of

. The only edges of  that are in

that are in  are those that can admit more flow; those edges

are those that can admit more flow; those edges  whose flow equals their capacity have

whose flow equals their capacity have

, and they are not in

, and they are not in  . Note that, in this way, all paths constructed may not be connected.

. Note that, in this way, all paths constructed may not be connected.

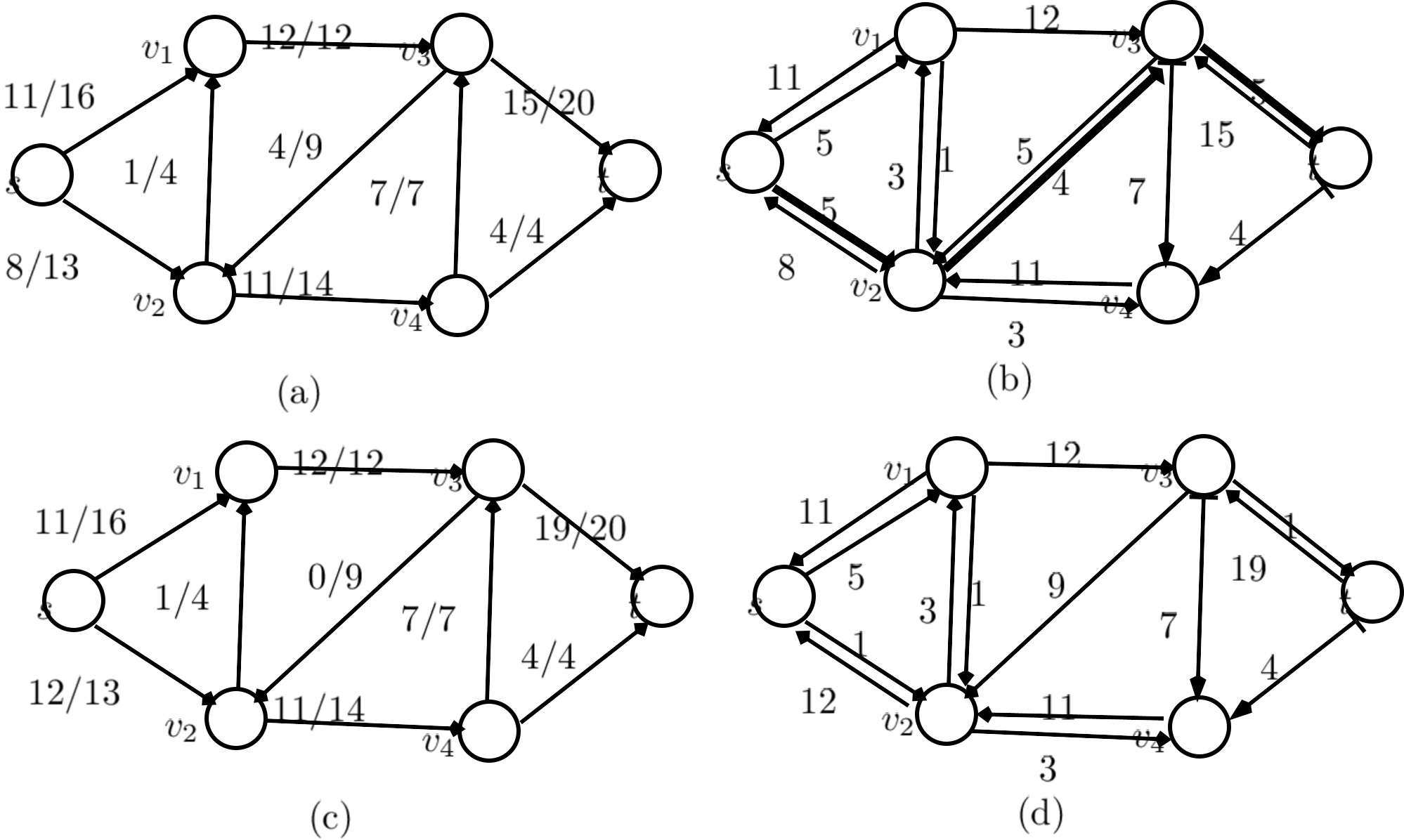

Consider the Figure ![[*]](crossref.png) (a), as a graph

(a), as a graph  with flows on each edge

with flows on each edge  is marked as

is marked as  , where

, where  is flow

is flow  and

and  is capacity of edge

is capacity of edge  expressed as

expressed as  . We would now construct a resudual network

. We would now construct a resudual network  from

from  .

.

The residual network  , may also contain edges that are not in

, may also contain edges that are not in  , however. Such edges will be only

, however. Such edges will be only  if

if  already exists in

already exists in  . As an algorithm manipulates the flow, with the goal of increasing the total flow, it might need to decrease the flow on a particular edge. That is, you can reduce that flow, to the maximum extent which is equal to the original

. As an algorithm manipulates the flow, with the goal of increasing the total flow, it might need to decrease the flow on a particular edge. That is, you can reduce that flow, to the maximum extent which is equal to the original  , and not more1. In order to represent a possible decrease of a positive flow

, and not more1. In order to represent a possible decrease of a positive flow  on an edge in

on an edge in  , we place an edge

, we place an edge  into

into  with residual capacity

with residual capacity

, that is, an edge that can admit flow in the opposite direction to

, that is, an edge that can admit flow in the opposite direction to  , at most canceling out the flow on

, at most canceling out the flow on  . These reverse edges in the residual network allow an algorithm to send back flow it has already sent along an edge. Sending flow back along an edge is equivalent to decreasing the flow on the edge, which is a necessary operation in many algorithms. Having completed this operation, the new flow graph is shown in Figure

. These reverse edges in the residual network allow an algorithm to send back flow it has already sent along an edge. Sending flow back along an edge is equivalent to decreasing the flow on the edge, which is a necessary operation in many algorithms. Having completed this operation, the new flow graph is shown in Figure ![[*]](crossref.png) (b). This has been done for all the edges, except those which were running at maximum capacity.

(b). This has been done for all the edges, except those which were running at maximum capacity.

More formally, suppose that we have a flow network

with source

with source  and sink

and sink  . Let

. Let  be a flow in

be a flow in  , and consider a pair of vertices

, and consider a pair of vertices

, we define the residual capacity

, we define the residual capacity

by

by

|

(2.6) |

Because our assumption that

implies

implies

, exactly one case in equation

, exactly one case in equation ![[*]](crossref.png) applies to each ordered pair vertices. As an example, if in this equation,

applies to each ordered pair vertices. As an example, if in this equation,

and

and

, then we can increase

, then we can increase  by up to

by up to

units before we exceed the capacity constraint on edge

units before we exceed the capacity constraint on edge  . We also wish to allow an algorithm to return up to 11 units of flow from

. We also wish to allow an algorithm to return up to 11 units of flow from  to

to  , and hence

, and hence

. This is with

. This is with

in figure

in figure ![[*]](crossref.png) (b).

(b).

Given the flow network

and a flow

and a flow  , the residual network of

, the residual network of  induced by

induced by  is

is

, where,

, where,

|

(2.7) |

This is as promised above, each edge of the residual network, or residual edge, can admit a flow that is greater than 0.

Figure ![[*]](crossref.png) (a), (b), (c), respectively show the, flow network

(a), (b), (c), respectively show the, flow network  , residual network

, residual network  .

.

Figure:

(a)Flow network  with flow

with flow  , (b) Residual network

, (b) Residual network  with augmenting path

with augmenting path  (shaded), its residual capacity is

(shaded), its residual capacity is

, edges with residual capacity equal to 0, such as

, edges with residual capacity equal to 0, such as

, are not shown, (c) The flow in

, are not shown, (c) The flow in  that results from augmenting along path

that results from augmenting along path  by its residual capacity

by its residual capacity  . Edges carrying no flow, such as

. Edges carrying no flow, such as

, are labeled by

, are labeled by

, (d) The residual network induced by the flow in (c).

, (d) The residual network induced by the flow in (c).

|

|

We increase the flow on  by

by

but decrease it by

but decrease it by

because pushing flow on the reverse edge in the residual network signifies decreasing the flow in the original network as cancellation. For example, in a data flow network, if we send 5 packets from

because pushing flow on the reverse edge in the residual network signifies decreasing the flow in the original network as cancellation. For example, in a data flow network, if we send 5 packets from  to

to  , and send send 2 from

, and send send 2 from  to

to  , we could equivalently just send 3 packets from

, we could equivalently just send 3 packets from  to

to  and none from

and none from  to

to  .

.

In figure ![[*]](crossref.png) (b), we can add the flow in forward direction by difference amount, e.g., on edge

(b), we can add the flow in forward direction by difference amount, e.g., on edge  we can add 5 units, and at any time we can undo the forward flow by 8 units.

we can add 5 units, and at any time we can undo the forward flow by 8 units.

After augmenting graph in figure ![[*]](crossref.png) (a) with the augmenting path

(a) with the augmenting path

, having augmented flow of

, having augmented flow of  , it cancels existing flow in edge

, it cancels existing flow in edge

, hence flow in edge

, hence flow in edge

is zero.

is zero.

![[*]](crossref.png) ).

).

![[*]](crossref.png) (a), as a graph

(a), as a graph

![[*]](crossref.png) (b). This has been done for all the edges, except those which were running at maximum capacity.

(b). This has been done for all the edges, except those which were running at maximum capacity.

![[*]](crossref.png) applies to each ordered pair vertices. As an example, if in this equation,

applies to each ordered pair vertices. As an example, if in this equation,

![[*]](crossref.png) (b).

(b).

![[*]](crossref.png) (a), (b), (c), respectively show the, flow network

(a), (b), (c), respectively show the, flow network

![[*]](crossref.png) (b), we can add the flow in forward direction by difference amount, e.g., on edge

(b), we can add the flow in forward direction by difference amount, e.g., on edge

![[*]](crossref.png) (a) with the augmenting path

(a) with the augmenting path